OpenAI has introduced new features for its large language models (LLMs), GPT-4 and gpt-3.5-turbo, that may interest developers who use them in their applications or other projects.

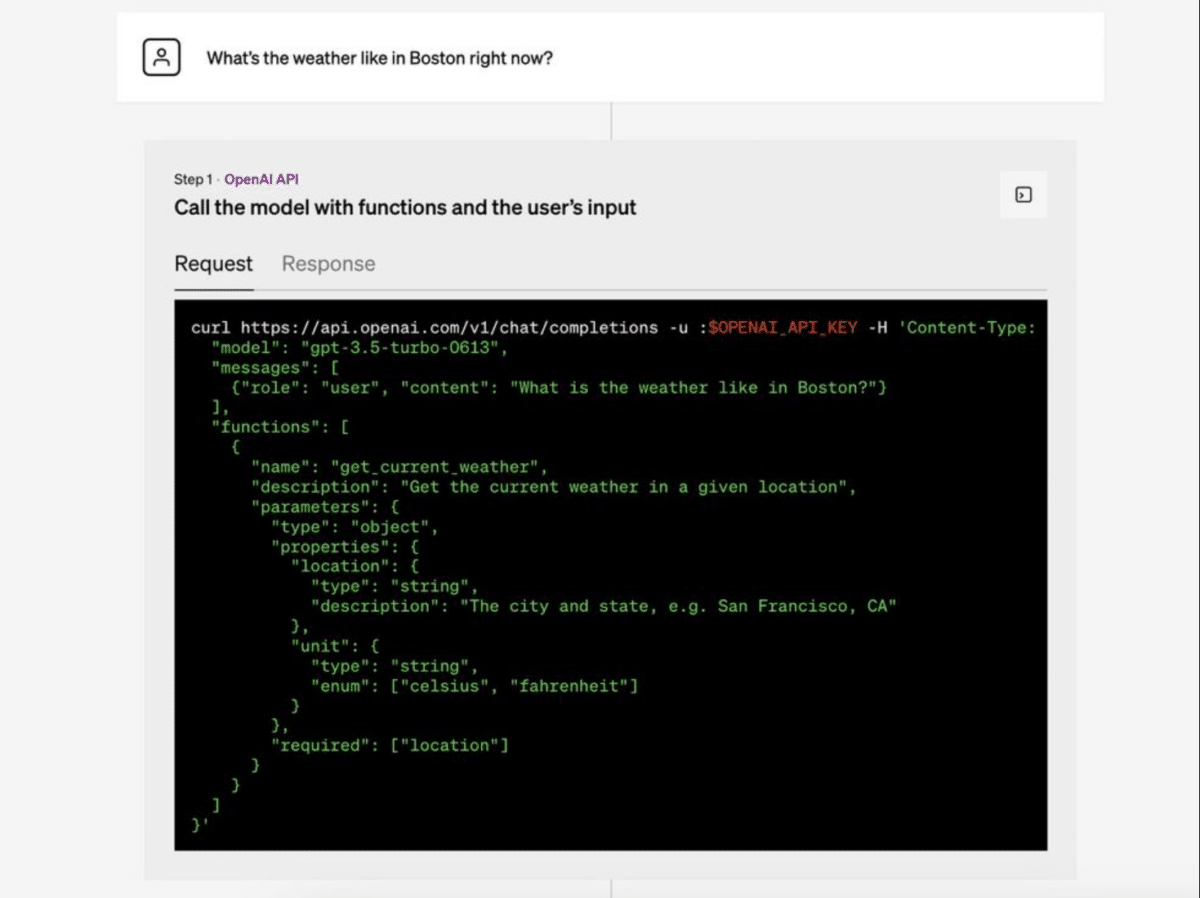

The updates mainly concern the API that developers use to communicate with OpenAI's GPT models. One of the new features is function calling through the GPT models' APIs. This makes it easier for developers' chatbots to draw on data from external functions or database queries when responding to users.
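To make the function-calling flow concrete, here is a minimal sketch of how it could look in Python, assuming the `functions` request field and `function_call` response field of the Chat Completions API as introduced with the `-0613` model versions (newer API versions favor a `tools` parameter instead). The function `get_current_weather`, its stubbed data, and the `AVAILABLE_FUNCTIONS` registry are hypothetical. The sketch does not send a request; it builds the request body and handles a simulated model reply, so it runs offline.

```python
import json

# Hypothetical local function the chatbot may call. A real one might query
# a weather service or a database; this one returns stubbed data.
def get_current_weather(location: str, unit: str = "celsius") -> dict:
    return {"location": location, "temperature": 22, "unit": unit}

# Hypothetical registry mapping names exposed to the model to Python callables.
AVAILABLE_FUNCTIONS = {"get_current_weather": get_current_weather}

# Body for POST /v1/chat/completions. Each function is described with a
# JSON Schema so the model knows which arguments it may supply.
request_body = {
    "model": "gpt-3.5-turbo-0613",
    "messages": [{"role": "user", "content": "What's the weather like in Oslo?"}],
    "functions": [
        {
            "name": "get_current_weather",
            "description": "Get the current weather in a given location",
            "parameters": {
                "type": "object",
                "properties": {
                    "location": {"type": "string", "description": "City name"},
                    "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
                },
                "required": ["location"],
            },
        }
    ],
}

def handle_function_call(assistant_message: dict) -> dict:
    """Run the function the model asked for and build the follow-up message."""
    call = assistant_message["function_call"]
    func = AVAILABLE_FUNCTIONS[call["name"]]
    # The model returns its arguments as a JSON-encoded string.
    args = json.loads(call["arguments"])
    result = func(**args)
    # The result is sent back to the model in a message with role "function".
    return {"role": "function", "name": call["name"], "content": json.dumps(result)}

# Simulated assistant reply in the shape the API uses for a function call.
simulated_reply = {
    "role": "assistant",
    "content": None,
    "function_call": {
        "name": "get_current_weather",
        "arguments": '{"location": "Oslo"}',
    },
}

follow_up = handle_function_call(simulated_reply)
print(follow_up)
# {'role': 'function', 'name': 'get_current_weather', 'content': '{"location": "Oslo", "temperature": 22, "unit": "celsius"}'}
```

In a real application, `follow_up` would be appended to `messages` and sent in a second request so the model can phrase an answer using the function's result. Note that the model only proposes the call; your own code decides whether and how to execute it.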

It is also now possible to send significantly more text (tokens) to gpt-3.5-turbo in a single request. The new version is called “gpt-3.5-turbo-16k,” and OpenAI describes it as follows: “gpt-3.5-turbo-16k offers 4 times the context length of gpt-3.5-turbo at twice the price: $0.003 per 1K input tokens and $0.004 per 1K output tokens. 16k context means the model can now support ~20 pages of text in a single request.”
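The quoted prices translate into a simple per-request calculation. The sketch below uses the rates from OpenAI's description and assumes context sizes of 4,096 tokens for gpt-3.5-turbo and 16,384 tokens for gpt-3.5-turbo-16k, with input and output tokens sharing that window. The helper `estimate_cost` is hypothetical, and real bills depend on the exact token counts the API reports.

```python
from decimal import Decimal

# Prices quoted for gpt-3.5-turbo-16k, in US dollars per 1,000 tokens.
INPUT_PRICE_PER_1K = Decimal("0.003")
OUTPUT_PRICE_PER_1K = Decimal("0.004")

# Assumed context window sizes in tokens (input and output share the window).
CONTEXT_4K = 4_096
CONTEXT_16K = 16_384

def estimate_cost(input_tokens: int, output_tokens: int) -> Decimal:
    """Estimate the price of one gpt-3.5-turbo-16k request in US dollars."""
    if input_tokens + output_tokens > CONTEXT_16K:
        raise ValueError("request exceeds the 16k-token context window")
    # Decimal avoids floating-point rounding noise in money amounts.
    return (Decimal(input_tokens) / 1000 * INPUT_PRICE_PER_1K
            + Decimal(output_tokens) / 1000 * OUTPUT_PRICE_PER_1K)

print(CONTEXT_16K // CONTEXT_4K)     # 4
print(estimate_cost(12_000, 1_000))  # 0.040
```

A 12,000-token prompt would not fit in the 4k model at all; in the 16k model it costs about four cents including a 1,000-token reply.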

GPT-4 can already handle a 32k context, but that version is not yet publicly available. OpenAI also announced lower prices for its APIs. Details of the new pricing can be found in the link below for those interested.