At this year’s Computex in Taiwan, Nvidia showcased technology that lets non-playable characters (NPCs) in games hold spoken conversations with the player. Called the Avatar Cloud Engine (ACE), it uses generative AI and voice synthesis to let players converse with NPCs.

As the demo clip above shows, players can ask an NPC questions and hold a conversation with it using their own voice, and the NPC answers through voice synthesis. Nvidia describes the current state of player dialogue with NPCs as follows:

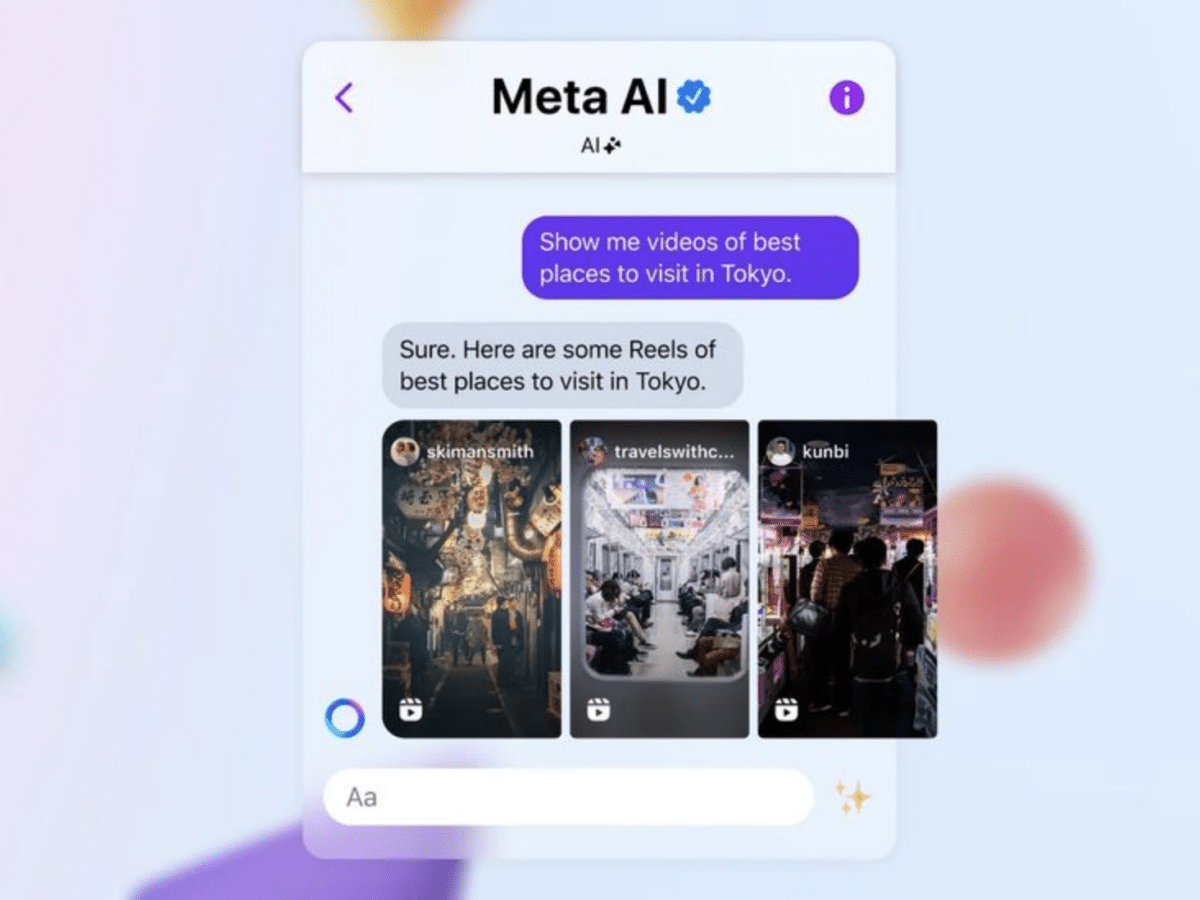

The creation of non-playable characters (NPCs) has evolved as games have become more sophisticated. The number of pre-recorded lines has grown, the number of options a player has to interact with NPCs has increased, and facial animations have become more realistic.

Yet player interactions with NPCs still tend to be transactional, scripted, and short-lived, because dialogue options are quickly exhausted and serve only to push the story forward. Now, generative AI can make NPCs more intelligent by improving their conversational skills, creating persistent personalities that evolve over time, and enabling dynamic responses that are unique to each player.

ACE brings together several of Nvidia’s tools and platforms: NeMo, for building and deploying the large language models (LLMs) that drive NPC dialogue; Riva, for speech recognition and text-to-speech conversion; and Omniverse Audio2Face, which animates characters’ faces in sync with the spoken dialogue.
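To make the division of labor concrete, one conversational turn can be sketched as a chain of four stages. This is a hypothetical Python sketch, not Nvidia’s API: the function names, the `NPCTurn` type, and the stage order (speech recognition, then LLM reply, then text-to-speech, then facial animation) are assumptions inferred from the component descriptions above. The placeholder stages stand in for the roles that Riva, NeMo, and Audio2Face play in ACE.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class NPCTurn:
    player_text: str        # what the player said, as text
    npc_text: str           # the NPC's generated reply
    npc_audio: bytes        # the synthesized reply (placeholder bytes here)
    face_frames: List[str]  # facial animation frames (placeholder labels here)


def run_npc_turn(
    player_audio: bytes,
    transcribe: Callable[[bytes], str],          # hypothetical ASR stage (Riva's role)
    generate_reply: Callable[[str], str],        # hypothetical LLM stage (NeMo's role)
    synthesize: Callable[[str], bytes],          # hypothetical TTS stage (Riva's role)
    animate_face: Callable[[bytes], List[str]],  # hypothetical lip-sync stage (Audio2Face's role)
) -> NPCTurn:
    """Run one player-to-NPC exchange through the assumed four-stage pipeline."""
    player_text = transcribe(player_audio)
    npc_text = generate_reply(player_text)
    npc_audio = synthesize(npc_text)
    face_frames = animate_face(npc_audio)
    return NPCTurn(player_text, npc_text, npc_audio, face_frames)


# Placeholder stages so the sketch runs without any real models.
def fake_transcribe(audio: bytes) -> str:
    # Pretend the "audio" is just UTF-8 text.
    return audio.decode("utf-8")


def fake_generate_reply(text: str) -> str:
    # Echo the question instead of calling an LLM.
    return f"You asked: {text}"


def fake_synthesize(text: str) -> bytes:
    # Encode the text as bytes instead of producing audio samples.
    return text.encode("utf-8")


def fake_animate_face(audio: bytes) -> List[str]:
    # Emit one placeholder frame per 8 bytes of "audio" (at least one frame).
    return [f"frame-{i}" for i in range(max(1, len(audio) // 8))]


if __name__ == "__main__":
    turn = run_npc_turn(
        b"Where is the ramen shop?",
        fake_transcribe, fake_generate_reply, fake_synthesize, fake_animate_face,
    )
    print(turn.npc_text)
```

Passing each stage in as a function keeps the sketch agnostic about which engine fills each role, which mirrors how ACE combines separate products for speech, language, and animation.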

Nvidia collaborated with Convai, a company that specializes in AI characters for games and virtual worlds, to build the ACE demo shown above in Unreal Engine 5.